Squall supports all After Effects expression controls except for the layer picker. In our example we use the Point and 3D Point controls.

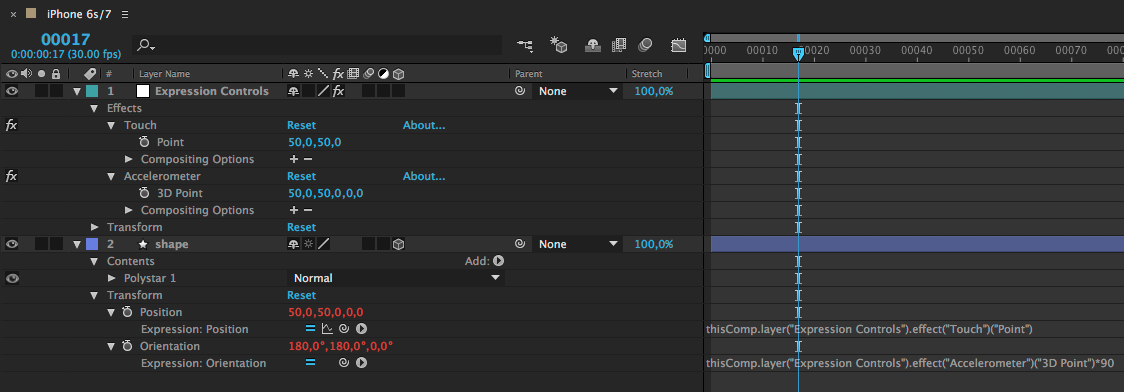

The null has two expression controls attached: a Point control named "Touch" and a 3D Point control named "Accelerometer".

The polystar has an expression on its Position property that links it to the Touch point control on the null, and an expression on its Orientation property that links it to the null's Accelerometer 3D point control.

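The iOS accelerometer reports acceleration in units of g, so each axis reads roughly between -1 and 1 while the device is tilted rather than shaken. We multiply the input by 90 to turn it into degrees of rotation. Alternatively, we could scale the values in Xcode before passing them to the animation.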
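If you do the scaling in Xcode instead, it is one multiplication per axis. The sketch below is a minimal illustration; `tiltInDegrees` is a hypothetical helper, and clamping to -1...1 is an added assumption so that a sharp shake (which can push a reading past 1 g) cannot produce an angle beyond ±90°.

```swift
/// Hypothetical helper: maps one accelerometer axis reading (in g) to degrees.
/// Assumption: readings are clamped to -1...1 so shakes cannot exceed ±90°.
func tiltInDegrees(_ acceleration: Double) -> Double {
    let clamped = min(max(acceleration, -1.0), 1.0)
    return clamped * 90.0
}

let readings = [-1.0, -0.5, 0.0, 0.5, 1.3]
let degrees = readings.map(tiltInDegrees)
print(degrees) // [-90.0, -45.0, 0.0, 45.0, 90.0]
```

If you move the scaling into Swift, drop the `* 90` from the After Effects expression so the value is not scaled twice.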

We retrieve our null layer by its After Effects name, "Expression Controls". The method getLayersWithName returns an array of objects conforming to SLLayerProtocol, and we take the first one. Each of these objects is guaranteed to be a CALayer (the API vends id/AnyObject to keep Swift and Objective-C compatible).

SLLayerProtocol tells us that this object has an expressionControls array. That array holds the properties we want to manipulate, so we pass it to our ExpressionController class, which takes care of the rest.

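```swift
// Inside the view controller, e.g. in viewDidLoad().
// Load the exported animation and add it to the view's layer tree.
guard let animation = SLSquallAnimation(fromBundle: "accelerometer.sqa") else { return }
self.view.layer.addSublayer(animation)
self.view.backgroundColor = UIColor(red: 101.0/255.0, green: 44.0/255.0, blue: 218.0/255.0, alpha: 1.0)

// The null carrying the expression controls, looked up by its After Effects name.
guard let layer = animation.getLayersWithName("Expression Controls")?.first else { return }

// ExpressionController wires the Touch and Accelerometer controls to user input.
let controls = ExpressionController(frame: self.view.frame)
controls.animation = animation
controls.connectControlsFromProperties(properties: layer.expressionControls)
self.view.addSubview(controls)
```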

Our ExpressionController receives the expressionControls array in its connectControlsFromProperties method. We iterate over the array and look for the properties named "Touch" and "Accelerometer". Once we have found them, we iterate over their subproperties and look for the "Point" and "3D Point" properties, respectively.

These names correspond to the ones used in our After Effects expressions.

```swift
// Finds the "Point" and "3D Point" sub-properties of the Touch and Accelerometer controls.
func connectControlsFromProperties(properties: [SLProperty]) {
    for p in properties {
        switch p.name {
        case "Touch":
            for subProperty in p.subProperties where subProperty.name == "Point" {
                self.touchProperty = subProperty
            }
        case "Accelerometer":
            for subProperty in p.subProperties where subProperty.name == "3D Point" {
                self.accelerationProperty = subProperty
                startAccelerometer()
            }
        default:
            break
        }
    }
}
```
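The same lookup can also be written with `first(where:)`. Squall is not available outside an iOS project, so this sketch uses a hypothetical `MockProperty` class that mimics only the `name`, `subProperties` and `value` members of SLProperty, plus a hypothetical helper `findProperty(control:child:in:)`.

```swift
// Hypothetical stand-in for SLProperty, reduced to the members used in this article.
final class MockProperty {
    let name: String
    let subProperties: [MockProperty]
    var value: Any?

    init(name: String, subProperties: [MockProperty] = [], value: Any? = nil) {
        self.name = name
        self.subProperties = subProperties
        self.value = value
    }
}

/// Returns the sub-property `child` of the top-level control named `control`, if both exist.
func findProperty(control: String, child: String, in properties: [MockProperty]) -> MockProperty? {
    let parent = properties.first(where: { $0.name == control })
    return parent?.subProperties.first(where: { $0.name == child })
}

// Mirrors the two controls on the "Expression Controls" null.
let controls = [
    MockProperty(name: "Touch", subProperties: [MockProperty(name: "Point")]),
    MockProperty(name: "Accelerometer", subProperties: [MockProperty(name: "3D Point")]),
]

let touch = findProperty(control: "Touch", child: "Point", in: controls)
let accel = findProperty(control: "Accelerometer", child: "3D Point", in: controls)
print(touch?.name ?? "nil", accel?.name ?? "nil") // Point 3D Point
```

Unlike the switch, this helper does not start the accelerometer; you would call `startAccelerometer()` only after checking that the 3D Point property was found.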

When we receive touch callbacks, we convert the touch position from the ExpressionController's coordinate space into the coordinate space of the animation's root layer.

Our touch property is a Point property and requires a CGPoint wrapped in an NSValue. If you are unsure what kind of value a property requires, read out the value of the SLProperty and inspect it at a breakpoint (or print it to the console). Assigning a value of the wrong type throws an exception.

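```swift
// Pan gesture target; @objc lets it be used with #selector (required since Swift 4).
@objc func onPan(_ pan: UIPanGestureRecognizer) {
    guard let animation = self.animation, let rootLayer = animation.rootLayer else { return }

    // Convert from this view's coordinate space into the animation's root layer.
    let location = pan.location(in: self)
    let touchPointInAnimation = rootLayer.convert(location, from: self.layer)

    // A Point control expects a CGPoint boxed in an NSValue.
    self.touchProperty?.value = NSValue(cgPoint: touchPointInAnimation)
    updateAnimationifNecessary()
}
```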
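CALayer's `convert(_:from:)` handles the conversion for you. To show what it computes in the simplest case, here is a plain-Swift sketch; the `Point` type and `convertToLayerSpace` function are hypothetical, and it assumes the root layer is only offset and uniformly scaled (no rotation), with its scaled content's top-left corner at `layerOrigin` in view coordinates.

```swift
// Minimal 2D point type so the sketch runs without UIKit or CoreGraphics.
struct Point: Equatable {
    var x: Double
    var y: Double
}

/// Hypothetical: converts a point from the host view's space into the root layer's space.
/// Assumes the layer is offset by `layerOrigin` and uniformly scaled by `scale`, no rotation.
/// CALayer.convert(_:from:) handles the general case, including arbitrary transforms.
func convertToLayerSpace(_ p: Point, layerOrigin: Point, scale: Double) -> Point {
    return Point(x: (p.x - layerOrigin.x) / scale,
                 y: (p.y - layerOrigin.y) / scale)
}

// Example: an animation drawn at 2x, whose root layer sits at (20, 40) in the view.
let touchInView = Point(x: 120, y: 240)
let touchInAnimation = convertToLayerSpace(touchInView, layerOrigin: Point(x: 20, y: 40), scale: 2)
print(touchInAnimation.x, touchInAnimation.y) // 50.0 100.0
```

Without this conversion, the polystar would follow the finger only when the animation happens to fill the view at 1x with no offset.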

Since we know which layer has the expression attached, we can call evaluateExpressions on that specific layer. We only do this while the animation is paused.

```swift
// Re-evaluates the shape layer's expressions while playback is paused.
private func updateAnimationifNecessary() {
    if let animation = self.animation, animation.isPaused() {
        animation.getShapeLayer(withName: "shape")?.evaluateExpressions()
    }
}
```

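Since our accelerometer property is a 3D Point, we wrap the smoothed data in an array and assign it to the value property. The smoothing is a simple low-pass filter: each update blends 5% of the new reading into the running value. Note that X and Y are passed in swapped order, presumably because tilting the device around one axis shows up in the gravity reading of the other axis.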

```swift
// Requires `import CoreMotion` and a `motionManager: CMMotionManager` property.
private func startAccelerometer() {
    guard motionManager.isAccelerometerAvailable else { return }

    motionManager.startAccelerometerUpdates(to: OperationQueue.main) { [weak self] data, _ in
        guard let self = self, let data = data else { return }

        // Low-pass filter: blend 5% of the new reading into the running value.
        let factor = 0.05
        self.accelerationX = data.acceleration.x * factor + self.accelerationX * (1.0 - factor)
        self.accelerationY = data.acceleration.y * factor + self.accelerationY * (1.0 - factor)
        self.accelerationZ = data.acceleration.z * factor + self.accelerationZ * (1.0 - factor)

        // A 3D Point control takes an array of three numbers; X and Y are swapped here.
        self.accelerationProperty?.value = [self.accelerationY, self.accelerationX, self.accelerationZ]
        self.updateAnimationifNecessary()
    }
}
```
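The smoothing in the handler is an exponential moving average. Pulled out into its own type, it might look like the sketch below; `LowPassFilter` is a hypothetical name, and the simulated readings (device lying still, gravity entirely along -z) are made up for illustration.

```swift
/// Hypothetical exponential low-pass filter: the same per-axis smoothing
/// the accelerometer handler applies with `factor = 0.05`.
struct LowPassFilter {
    let factor: Double                  // weight of the newest sample, 0...1
    private(set) var value: Double = 0.0

    init(factor: Double) {
        precondition((0.0...1.0).contains(factor), "factor must be in 0...1")
        self.factor = factor
    }

    /// Blends a new sample into the running value and returns the smoothed result.
    mutating func update(_ sample: Double) -> Double {
        value = sample * factor + value * (1.0 - factor)
        return value
    }
}

// One filter per axis; output uses the [y, x, z] order passed to the 3D Point.
var x = LowPassFilter(factor: 0.05)
var y = LowPassFilter(factor: 0.05)
var z = LowPassFilter(factor: 0.05)

// Simulated readings: device lying still, gravity along -z, for 20 updates.
var smoothed: [Double] = []
for _ in 0..<20 {
    smoothed = [y.update(0.0), x.update(0.0), z.update(-1.0)]
}
print(smoothed)
```

A smaller factor gives smoother but laggier motion: after 20 updates of a constant -1 g reading, the smoothed z value has only reached about -0.64 (that is, -(1 - 0.95^20)).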